Speech recognition in Smart Industry

This scenario refers to Smart Industry, where Deep Learning is used for speech recognition. The objective of this use case is to develop an embedded speech recognition system that would activate/deactivate PLC-controlled tooling machinery or collaborative robots in an industrial environment, without relying on a cloud backend.

Why?

Both the speech recognition and voice recognition markets are expected to grow quickly through 2022, and voice-activated or voice-controlled devices have been booming in recent years thanks to the progress made by voice and speech recognition technologies. As computing devices of all shapes and sizes increasingly surround us, we will come to rely more on natural interfaces such as voice, touch, and gesture. Although visual and auditory perception are both of paramount importance, speech has historically had a high priority in human communication, having developed long before the introduction of writing. It therefore has the potential to be an important mode of interaction with computers and machinery. Moreover, speech works at a distance, making it extremely useful when operators must keep their hands and eyes on something else.

In the past, developing an intelligent voice interface was a complex task, feasible only if system designers had access to a dedicated development team inside a major corporation such as Apple, Google or Microsoft. Today, thanks to a small but growing number of cloud-based APIs, developers can build an intelligent voice interface for any app or website without needing an advanced degree in natural language processing. These APIs, however, require an available, high-speed, compute-intensive cloud backend to process all voice and speech features, including noise extraction, noise suppression, voice pattern recognition, user identification, emotion detection, word recognition, semantic pattern analysis and so on. The resulting latency and potential loss of precision can affect critical applications such as those involving interaction with machinery.

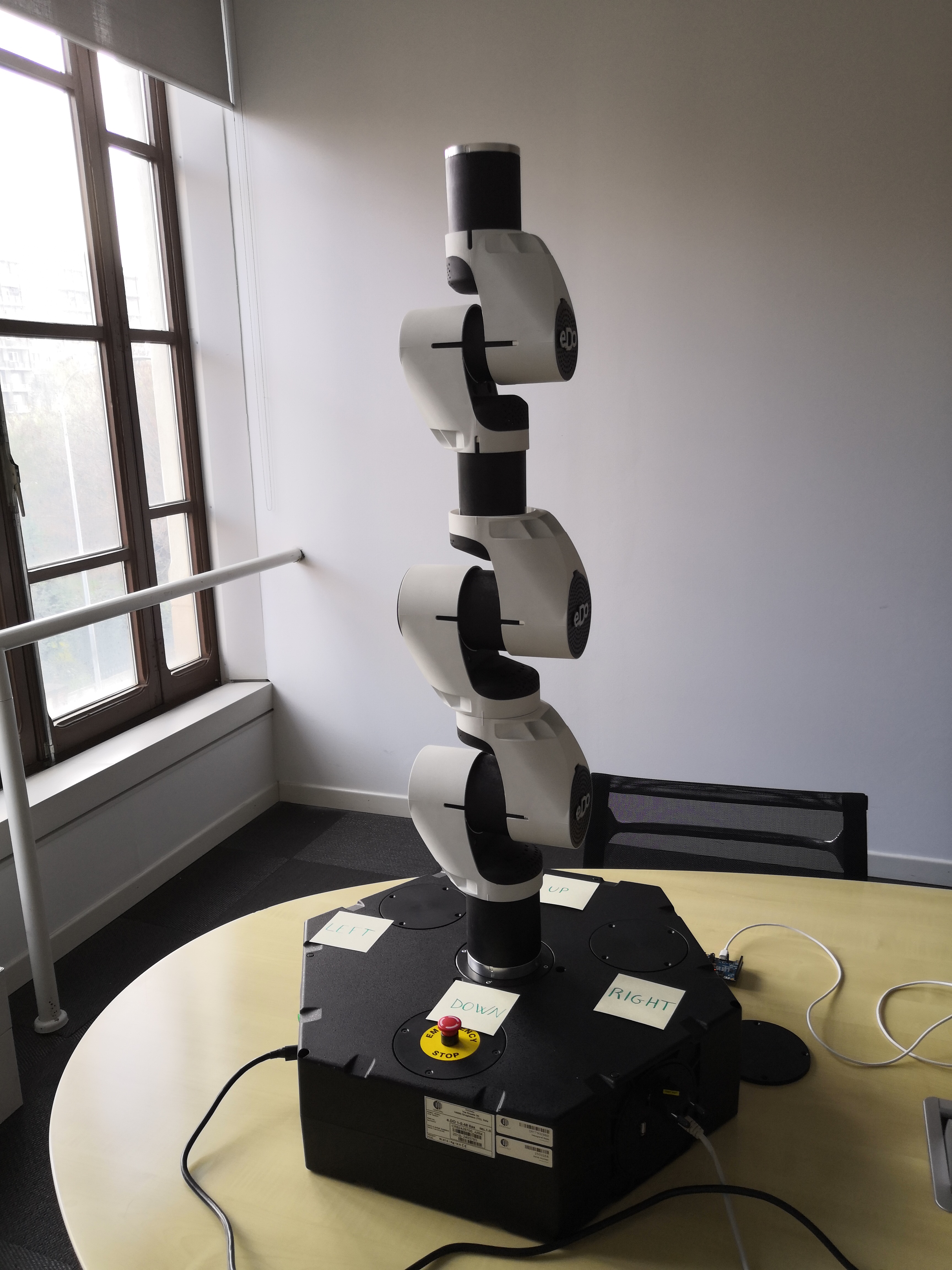

Speech recognition used to control a robotic arm (e.Do by Comau) keeping hands free

How?

The ALOHA project intends to address the needs of this scenario by leveraging Deep Learning (DL) algorithms. DL appears to have finally made speech recognition accurate enough to target environments that are not strictly controlled. Thanks to DL, speech recognition may become the primary mode of interaction between humans and computers, or machinery as in this scenario. DL enabled Baidu to improve speech recognition accuracy from 89% to 99%. Speech technologies will go far beyond smartphones and may soon appear in home appliances and wearable devices.

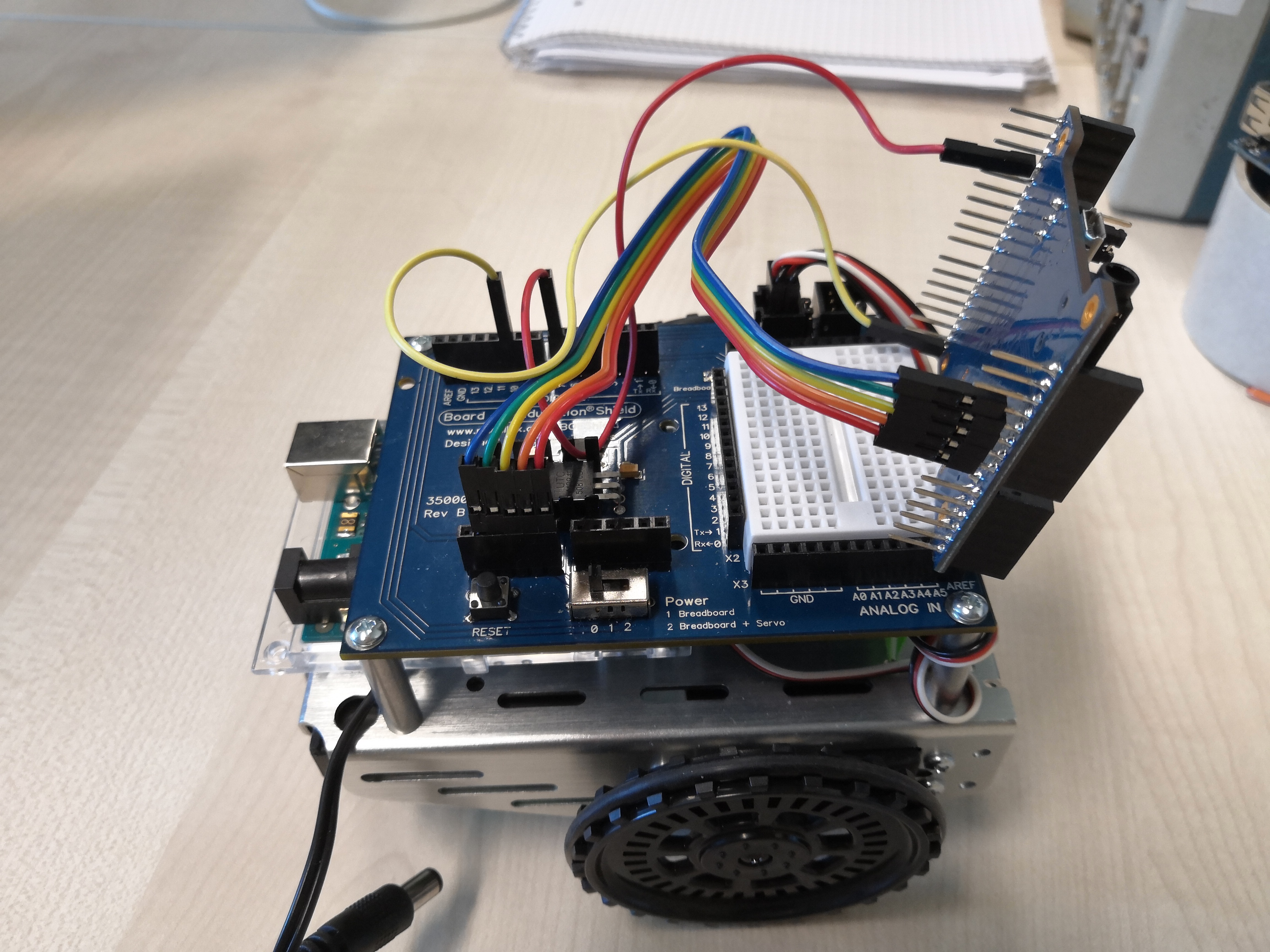

The use of neural networks in talker-independent continuous speech recognition is not new, and over the years DL has become a resourceful solution to this problem. Using the ALOHA tool flow, the consortium intends to make designers' lives easier by allowing them to characterize and deploy an embedded voice recognition system capable of natural language processing in critical scenarios, such as industrial applications where real-time processing is mandatory for fast and safe human-machinery interaction.

Starting from existing DL algorithms suitable for voice recognition and speech pattern recognition, energy-aware and secure software code will be automatically deployed on optimal hardware modules, optimized for the given functionality to provide hard real-time execution. The APIs created with the ALOHA framework will serve as a foundation to jumpstart development activities and to create a benchmark for Deep Learning applied to voice recognition in industrial environments.

Related Videos

Voice control of e.Do robotic arm using ALOHA

In this video, speech recognition is used to control a robotic arm (e.Do by Comau) keeping hands free. Two modalities are available: predefined poses or spatial mode using x, y and z coordinates. The deep neural network model is speaker independent and classifies audio into ten keywords.

Video by Santer Reply.
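The page states only that the model is speaker independent, classifies audio into ten keywords, and supports two modalities (predefined poses and x/y/z spatial moves). The Python sketch below illustrates one possible way such classifier outputs could be turned into robot commands. The keyword vocabulary, the confidence threshold, the step size and the command tuples are all hypothetical assumptions, not details of the e.Do demonstrator.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Sequence, Tuple

# HYPOTHETICAL vocabulary: the source only says the model has ten keywords.
KEYWORDS = ["pose", "space", "one", "two", "x", "y", "z", "plus", "minus", "stop"]
AXES = {"x": 0, "y": 1, "z": 2}


def decode(probs: Sequence[float], threshold: float = 0.8) -> Optional[str]:
    """Return the most probable keyword, or None if confidence is below threshold."""
    if len(probs) != len(KEYWORDS):
        raise ValueError("expected one probability per keyword")
    best = max(range(len(probs)), key=lambda i: probs[i])
    return KEYWORDS[best] if probs[best] >= threshold else None


@dataclass
class RobotController:
    """Hypothetical two-mode controller: predefined poses or x/y/z steps."""
    mode: str = "pose"                      # "pose" or "space"
    axis: int = 0                           # axis selected in spatial mode
    step: float = 10.0                      # assumed step size (e.g. mm)
    position: List[float] = field(default_factory=lambda: [0.0, 0.0, 0.0])
    commands: List[Tuple] = field(default_factory=list)

    def handle(self, keyword: Optional[str]) -> None:
        if keyword is None:                 # low-confidence input is ignored
            return
        if keyword == "stop":               # stop is accepted in every mode
            self.commands.append(("stop",))
        elif keyword in ("pose", "space"):  # switch modality
            self.mode = keyword
        elif self.mode == "pose" and keyword in ("one", "two"):
            self.commands.append(("goto_pose", keyword))
        elif self.mode == "space" and keyword in AXES:
            self.axis = AXES[keyword]
        elif self.mode == "space" and keyword in ("plus", "minus"):
            sign = 1.0 if keyword == "plus" else -1.0
            self.position[self.axis] += sign * self.step
            self.commands.append(("move_to", tuple(self.position)))
        # any other keyword is not valid in the current mode and is ignored
```

In this sketch, low-confidence predictions and keywords that are not valid in the current mode are simply dropped, so the controller errs on the side of not moving the robot rather than acting on an uncertain command.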


Voice control in smart industry

The video presents the initial version of the smart industry demonstrator, where Deep Learning is used for speech recognition. Santer Reply, responsible for this use case, used the ALOHA toolflow to build an embedded keyword spotting system that controls a robot without relying on a cloud backend.

Video by Cristina Chesta, Santer Reply.
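The page does not say how the embedded keyword spotter turns a continuous stream of per-frame model outputs into discrete detections. One common post-processing approach in streaming keyword spotting is to average the class posteriors over a short sliding window, fire only when the average exceeds a threshold, and then ignore input for a refractory period so a single utterance does not trigger twice. The Python sketch below illustrates that technique under stated assumptions; the class name `PosteriorSmoother`, the window length, threshold and refractory period are hypothetical and not taken from the Santer Reply system.

```python
from collections import deque
from typing import Deque, List, Optional, Sequence


class PosteriorSmoother:
    """Hypothetical helper: fire a class when its windowed mean posterior is high."""

    def __init__(self, num_classes: int, window: int = 5,
                 threshold: float = 0.7, refractory: int = 10) -> None:
        self.num_classes = num_classes
        self.frames: Deque[List[float]] = deque(maxlen=window)  # recent posteriors
        self.threshold = threshold      # assumed detection threshold
        self.refractory = refractory    # assumed frames to ignore after a detection
        self.cooldown = 0

    def push(self, probs: Sequence[float]) -> Optional[int]:
        """Add one frame of posteriors; return a class index on detection, else None."""
        if len(probs) != self.num_classes:
            raise ValueError("expected one probability per class")
        self.frames.append(list(probs))
        if self.cooldown > 0:           # still in refractory period after a detection
            self.cooldown -= 1
            return None
        if len(self.frames) < self.frames.maxlen:
            return None                 # not enough context yet
        avg = [sum(f[c] for f in self.frames) / len(self.frames)
               for c in range(self.num_classes)]
        best = max(range(self.num_classes), key=avg.__getitem__)
        if avg[best] >= self.threshold:
            self.cooldown = self.refractory
            self.frames.clear()         # start fresh after firing
            return best
        return None
```

Averaging over several frames trades a few frames of latency for robustness against isolated spurious peaks, which is usually an acceptable compromise when a false trigger could move machinery.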
